The new reality of hardware

In modern hardware engineering, every product launch feels like a launch window. Development cycles are shrinking, competition is accelerating, and one missed milestone can cascade into lost market share or revenue. The pressure is particularly acute in industries like aerospace, automotive, and consumer electronics, where complexity is climbing and tolerance for rework is vanishing.

The costs are clear

🗓️ Each board respin adds 4–6 weeks to a schedule

💰 Direct costs range from $50,000 to $100,000 per spin

💲 Opportunity costs: missed market entry, delayed customer commitments, and eroded reputation

Larger companies can no longer afford “good enough” processes. Just as CAD and PLM once became indispensable foundations for hardware teams, AI is emerging as the new product readiness catalyst.

From PLM to AI: a familiar adoption curve

When product lifecycle management (PLM) systems were first introduced, they faced skepticism. Engineers worried about extra steps. Managers worried about cost. Adoption lagged until complexity forced the issue. A single aircraft program might involve thousands of suppliers and millions of parts over decades of support; coordination without PLM was untenable.

Eventually, PLM became the backbone of aerospace and beyond. It standardized processes, tracked revisions, and aligned distributed teams. What was once perceived as bureaucracy turned into critical infrastructure.

AI in hardware is following the same arc. At first glance, it looks like another tool in search of a problem. Engineers are right to be skeptical. But as product complexity rises and timelines shrink, traditional methods no longer scale. Just as PLM once enabled global coordination, AI is emerging as the foundation for speed, safety, and confidence in hardware design.

The cost of friction

Manual checks and inconsistent processes introduce drag across the lifecycle:

- Respins: schematic errors, footprint mismatches, or library inconsistencies detected too late

- Onboarding: months of shadowing required before new engineers can operate independently

- Library drift: subtle symbol variations create downstream errors and confusion

- Review bottlenecks: engineers hesitate to move forward without confidence in their safety nets

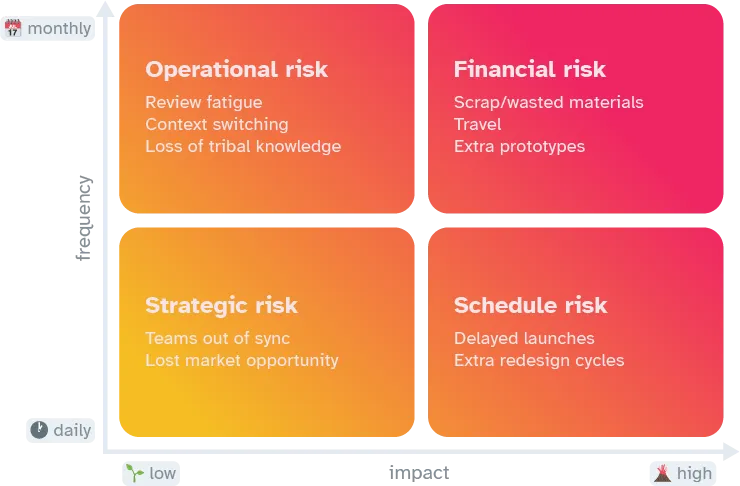

A reframed risk matrix makes the tradeoffs obvious. Errors that are both frequent and high impact—like net mismatches, wrong footprints, or stale libraries—are the first wins for AI review agents. Addressing these early saves the most time and money.
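The prioritization logic behind a risk matrix can be sketched in a few lines: score each risk as frequency multiplied by impact and work down the list from the top. This is a minimal sketch, not a method from any specific tool. The 1-5 rating scale, the `Risk` type, the `prioritize` function, and every rating in the example are hypothetical placeholders rather than measured data; substitute your own team's numbers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    """A risk category rated on an assumed 1-5 scale for frequency and impact."""

    name: str
    frequency: int  # 1 = rare, 5 = shows up on nearly every design (assumed scale)
    impact: int  # 1 = minor cleanup, 5 = forces a board respin (assumed scale)

    @property
    def score(self) -> int:
        # Common risk-matrix priority: frequency multiplied by impact.
        return self.frequency * self.impact


def prioritize(risks: list[Risk]) -> list[Risk]:
    """Order risks from highest to lowest score; ties keep their input order."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Illustrative ratings only; replace them with your own team's data.
example_risks = [
    Risk("Symbol style variation", frequency=4, impact=2),
    Risk("Net mismatch", frequency=4, impact=5),
    Risk("Wrong footprint", frequency=3, impact=5),
    Risk("Stale library part", frequency=3, impact=4),
]

for risk in prioritize(example_risks):
    print(f"{risk.score:>2}  {risk.name}")
```

With these placeholder ratings, net mismatches (20) and wrong footprints (15) land at the top, which is where an automated review check would pay off first.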

Some common areas of risk that can surface for hardware teams and their relative frequency / impact:

The Quality Maturity Model: How to level up your engineering organization

The Quality Maturity Model (QMM) is a practical way to evaluate where your organization stands today, and what it will take to get to the next level. It’s adapted from the well-known Capability Maturity Model (CMM), originally developed at Carnegie Mellon University’s Software Engineering Institute in the late 1980s to improve software engineering processes.

Just as CMM helped software teams move from “code and pray” to disciplined, predictable delivery, QMM helps electronics teams move from “build and hope” to designing, sourcing, and manufacturing with confidence and consistency.

The five levels of quality maturity

Level 1 — Initial (chaotic)

Processes are ad hoc, undocumented, and dependent on individual heroics. Every project feels like starting from scratch.

Example: A small hardware startup where every engineer uses their own CAD libraries and file naming, and there’s no consistent review process.

Level 2 — Repeatable

Some standard processes exist, and past successes can be repeated — but execution depends heavily on individual discipline.

Example: A contract manufacturer that runs DFM checks for most projects, but has no consistent process for tracking supplier quality.

Level 3 — Defined

Processes are standardized, documented, and enforced across the organization. Design data lives in a single source of truth.

Example: An automotive electronics supplier where every ECU design flows through the same gated review process for manufacturability, compliance, and safety.

Level 4 — Managed

Processes are quantitatively measured and controlled. KPIs track yield, defect rates, review times, and supplier performance.

Example: A medical device company with real-time dashboards that flag trends during pilot builds and trigger early design intervention.

Level 5 — Optimizing

Continuous improvement is ingrained in culture and tooling. Lessons learned are captured and implemented, and automation is applied wherever possible.

Example: An aerospace manufacturer that uses continuous integration (CI) for ECAD and automated DFM checks to maintain near-zero defect escapes across multiple product generations.

For each statement, rate yourself from 0 to 2 (12 statements, 24 points maximum):

✏️ Design practices

☐ We have a single, version-controlled design library.

☐ All designs go through a documented, standardized review process.

☐ Review checklists cover manufacturability, compliance, and reliability.

⚙️ Process discipline

☐ BOMs, drawings, and manufacturing files are stored in a single source of truth.

☐ Engineering changes follow a formal, documented change control process.

☐ Reviews are conducted early and iteratively, not just before release.

📐 Measurement and control

☐ We track KPIs like defect escape rate, average review time, and first-pass yield.

☐ We can predict schedule or quality risks before they cause delays.

☐ Supplier quality is measured and informs sourcing decisions.

📊 Continuous improvement

☐ Lessons learned are systematically captured and integrated into processes.

☐ We use automation to catch issues before human review.

☐ Process improvements are prioritized and implemented regularly.

Scoring

🌱 0–7 points → Level 1–2 (Initial/Repeatable) — quality is inconsistent and risky.

🌿 8–14 points → Level 3 (Defined) — standardized, but could benefit from metrics and automation.

🌳 15–20 points → Level 4 (Managed) — data-driven, with room for continuous improvement.

🚀 21–24 points → Level 5 (Optimizing) — quality is a competitive advantage.
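The scoring bands above amount to a simple lookup. The sketch below assumes each of the 12 statements is rated 0, 1, or 2, the scale implied by the 24-point maximum; the `qmm_level` function, the `BANDS` table layout, and the example ratings are hypothetical illustrations.

```python
# Score bands from the scoring table: (lowest total, highest total, maturity level).
BANDS = [
    (0, 7, "Level 1–2 (Initial/Repeatable)"),
    (8, 14, "Level 3 (Defined)"),
    (15, 20, "Level 4 (Managed)"),
    (21, 24, "Level 5 (Optimizing)"),
]


def qmm_level(ratings: dict[str, list[int]]) -> tuple[int, str]:
    """Sum self-assessment ratings and map the total to a QMM level.

    Assumes four categories of three statements, each rated 0, 1, or 2.
    """
    if len(ratings) != 4:
        raise ValueError(f"expected 4 categories, got {len(ratings)}")
    for category, scores in ratings.items():
        if len(scores) != 3:
            raise ValueError(f"{category}: expected 3 ratings, got {len(scores)}")
        if any(score not in (0, 1, 2) for score in scores):
            raise ValueError(f"{category}: each rating must be 0, 1, or 2")

    total = sum(sum(scores) for scores in ratings.values())
    for low, high, level in BANDS:
        if low <= total <= high:
            return total, level
    # Unreachable once the checks above pass, since the total is always 0-24.
    raise ValueError(f"total {total} falls outside every band")


# Hypothetical self-assessment for illustration.
example = {
    "Design practices": [2, 1, 2],
    "Process discipline": [1, 1, 2],
    "Measurement and control": [1, 0, 1],
    "Continuous improvement": [1, 1, 0],
}
print(qmm_level(example))  # (13, 'Level 3 (Defined)')
```

Keeping the ratings grouped by category, rather than as one flat total, also shows where the points were lost; in this example, measurement and continuous improvement are the weakest areas.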

Why this matters now

In high-reliability industries — medical devices, automotive, aerospace — regulatory, safety, and reputational stakes are high. A maturity gap doesn’t just mean higher costs; it can mean lost market access, delayed certifications, or permanent damage to customer trust.

Even outside regulated sectors, low maturity increases the risk of missed launches, supply chain chaos, and eroded margins. The further you are from Level 5, the harder it is to respond quickly to market shifts — and the easier it is for competitors with higher maturity to take your customers.

Quality Maturity Model (QMM) - Ladder of quality

Proof points: outcomes AI is already delivering

Hardware teams adopting AI are reporting tangible results:

Fewer board spins: Early schematic linting tools catch mismatched nets or mis-assigned pins before layout. One aerospace supplier reported a 30 percent reduction in respins within the first year.

Less grunt work: Automated datasheet cross-checks flag errors invisible to tired eyes but costly in production. Engineers reclaim hours once lost to repetitive manual reviews.

Faster onboarding: AI-guided design reviews act like simulators for new hires, walking them through datasheet checks and schematic best practices. Ramp-up time shrinks from months to weeks.

Cleaner libraries: AI detects subtle symbol and footprint discrepancies across projects. Instead of managing a messy drawer of parts, teams converge on a single source of truth.

Quicker prototyping: By compressing validation cycles and eliminating obvious design errors up front, teams cut weeks from prototype bring-up. For VPs, that is the difference between hitting this quarter’s launch window and waiting for the next.
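To make the kinds of errors behind "mismatched nets," "mis-assigned pins," and "footprint discrepancies" concrete, here is a deliberately simple, rule-based sketch. It is not an AI model and not any vendor's tool; AI review agents may work very differently. It assumes a netlist already exported to plain Python dictionaries, and the data layout, the `lint_netlist` function, and the example parts are hypothetical.

```python
# A netlist maps each net name to the (reference designator, pin number) nodes on it.
Netlist = dict[str, list[tuple[str, str]]]


def lint_netlist(
    netlist: Netlist,
    part_footprints: dict[str, str],
    footprint_pads: dict[str, set[str]],
) -> list[str]:
    """Return human-readable findings for two simple rule-based checks."""
    findings: list[str] = []

    # Check 1: a net that touches only one pin is often a misspelled net name
    # that failed to join the net it was meant for.
    for net, nodes in sorted(netlist.items()):
        if len(nodes) == 1:
            ref, pin = nodes[0]
            findings.append(f"net {net}: single connection at {ref}.{pin}")

    # Check 2: every schematic pin should land on a pad of the part's footprint.
    # A miss suggests a symbol/footprint mismatch in the library.
    missing_footprint: set[str] = set()
    for _net, nodes in sorted(netlist.items()):
        for ref, pin in nodes:
            footprint = part_footprints.get(ref)
            if footprint is None:
                if ref not in missing_footprint:  # report each part only once
                    missing_footprint.add(ref)
                    findings.append(f"{ref}: no footprint assigned")
            elif pin not in footprint_pads.get(footprint, set()):
                findings.append(f"{ref}.{pin}: no pad {pin} on footprint {footprint}")

    return findings


# Hypothetical example design.
netlist = {
    "VCC_3V3": [("U1", "1"), ("C1", "1")],
    "VCC3V3": [("U2", "8")],  # typo: meant to join VCC_3V3
    "GND": [("U1", "4"), ("C1", "2"), ("U2", "4")],
    "SDA": [("U1", "5"), ("U2", "9")],  # U2's symbol has a pin 9, but SOIC-8 has 8 pads
}
part_footprints = {"U1": "SOIC-8", "U2": "SOIC-8", "C1": "C_0402"}
footprint_pads = {"SOIC-8": {str(n) for n in range(1, 9)}, "C_0402": {"1", "2"}}

for finding in lint_netlist(netlist, part_footprints, footprint_pads):
    print(finding)
```

On this example, the sketch reports the orphaned `VCC3V3` net and the pin 9 that has no pad on the SOIC-8 footprint, two errors that are easy to miss in a manual review and expensive to find after fabrication.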

The psychology of skepticism

Skepticism is built into engineering culture. Engineers do not adopt tools because they sound futuristic—they adopt them when the tools prevent errors and save hours. That is exactly how CAD, PLM, and simulation won acceptance.

The same pattern is playing out with AI. Early pilots prove value on live designs. Engineers see fewer errors in reviews, managers see faster onboarding, and executives see schedules holding. Once the tools demonstrate their worth, cultural resistance fades quickly.

Where this is heading

The adoption path is becoming clearer:

- Design review agent: continuous linting of schematics, footprints, and BOMs

- Design assistant with templates: reusable commands for common tasks (power tables, BOM analysis)

- Guided fixes: AI not only detects issues but proposes scoped corrections for human approval

- Prompted generation: agentic tools capable of implementing bounded design updates on command

At every stage, richer organizational context—libraries, standards, and past designs—feeds smarter recommendations.
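As an illustration of the kind of reusable "power table" command described in the design-assistant stage, here is a minimal sketch that sums worst-case load currents per rail and compares each total against a derated regulator limit. The `Load` and `RailRow` types, the `power_table` function, the 80 percent derating default, and the example rails and currents are all hypothetical assumptions, not values from any real design or tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Load:
    ref: str  # reference designator of the consuming part
    rail: str  # supply rail name
    current_ma: float  # assumed worst-case current draw in mA


@dataclass(frozen=True)
class RailRow:
    rail: str
    total_ma: float
    power_mw: float
    budget_ma: float
    ok: bool


def power_table(
    loads: list[Load],
    rail_voltage: dict[str, float],
    rail_capacity_ma: dict[str, float],
    derating: float = 0.8,  # assumed design margin: use at most 80% of capacity
) -> list[RailRow]:
    """Sum load current per rail and compare it with a derated regulator limit."""
    unknown = {load.rail for load in loads} - set(rail_voltage)
    if unknown:
        raise ValueError(f"loads reference undefined rails: {sorted(unknown)}")

    rows = []
    for rail in sorted(rail_voltage):
        total_ma = sum(load.current_ma for load in loads if load.rail == rail)
        budget_ma = rail_capacity_ma[rail] * derating
        rows.append(
            RailRow(
                rail=rail,
                total_ma=total_ma,
                power_mw=total_ma * rail_voltage[rail],  # V x mA = mW
                budget_ma=budget_ma,
                ok=total_ma <= budget_ma,
            )
        )
    return rows


# Hypothetical loads and regulators for illustration.
loads = [
    Load("U1", "3V3", 120.0),
    Load("U2", "3V3", 250.0),
    Load("U3", "1V8", 260.0),
]
rows = power_table(loads, {"3V3": 3.3, "1V8": 1.8}, {"3V3": 500.0, "1V8": 300.0})
for row in rows:
    status = "OK" if row.ok else "OVER BUDGET"
    print(f"{row.rail:>4}  {row.total_ma:6.1f} mA  {row.power_mw:7.1f} mW  "
          f"budget {row.budget_ma:6.1f} mA  {status}")
```

Here the 1V8 rail draws 260 mA against a derated budget of 240 mA and is flagged, while the 3V3 rail stays within its 400 mA budget. Rejecting loads on undefined rails, rather than silently skipping them, keeps a typo in a rail name from hiding current draw.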

Every missed launch window costs millions. Distributed teams, tighter margins, and escalating complexity mean that tolerance for rework has evaporated. The risk is not adopting AI—it is standing still while competitors move ahead.

AI is not hype. It is the next readiness system in hardware design—like CAD in the 1980s or PLM in the 1990s. AI does not replace engineers; it multiplies them. It gives them lift.
